Large Language Models in Business

Applications and Impact (2025)

Executive Summary

This report provides a comprehensive analysis of how Large Language Models (LLMs) are transforming business operations across key sectors in 2025. As organizations increasingly integrate these powerful AI systems into their workflows, we examine real-world applications, implementation approaches, strategic impacts, and the competitive landscape of major LLM providers.

Our findings reveal that LLMs have moved beyond experimentation to become critical business infrastructure, with the global LLM market projected to grow from $6.4 billion in 2024 to $36.1 billion by 2030, representing a CAGR of 33.2%. Organizations implementing LLMs effectively are realizing an average ROI of 41%, with technology companies seeing the highest returns (45%), closely followed by financial services (41%).

The report highlights key trends across startups, healthcare, and financial services, providing detailed insights into implementation strategies, challenges, and opportunities. Technical considerations including API vs. self-hosting, data privacy, and integration approaches are thoroughly explored to guide decision-makers in optimal deployment.

Introduction to LLMs in Business

Large Language Models (LLMs) have rapidly evolved from research curiosities to essential business tools. These AI systems, exemplified by models like OpenAI's GPT-4, Anthropic's Claude, and open-source alternatives like Meta's LLaMA, can understand and generate human-like text, enabling them to perform tasks that traditionally required human intelligence.

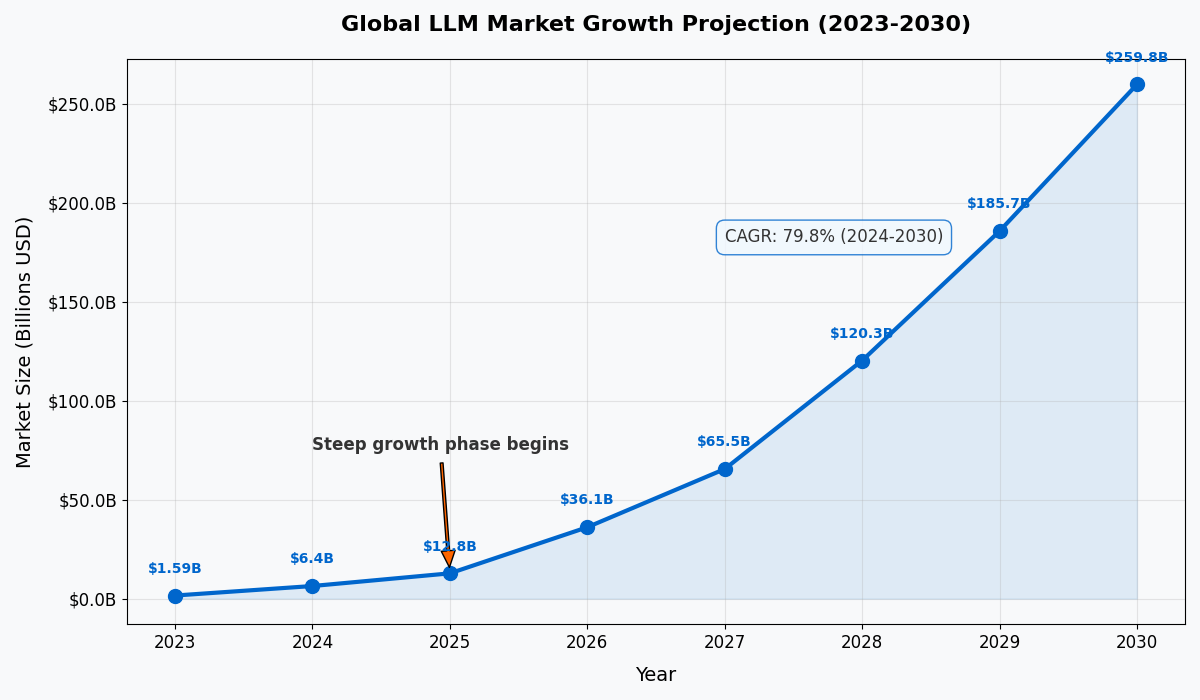

Figure 1: Global LLM market is projected to grow exponentially from $1.59B in 2023 to $259.8B by 2030

Since the breakthrough public launch of ChatGPT in late 2022, companies across industries have been exploring LLMs to streamline operations, enhance customer experiences, and unlock new value. In fact, recent analyses suggest generative AI could add trillions of dollars in economic value globally, with banking alone potentially seeing $200–$340 billion in annual impact (equivalent to 9–15% of the sector's operating profits).

The adoption of LLMs is happening unevenly across sectors, with technology, financial services, and professional services leading the way. This report explores how businesses are leveraging LLMs in 2025, covering key use cases and sector-specific applications with a focus on startups, healthcare, and finance. We'll also discuss technical considerations (APIs, fine-tuning, infrastructure, privacy/security) as well as strategic factors (ROI, workflow integration, vendor selection, competitive differentiation).

Market Growth and ROI Analysis

The global LLM market is experiencing unprecedented growth. Starting at just $1.59 billion in 2023, it's projected to reach $259.8 billion by 2030, representing a compound annual growth rate (CAGR) of nearly 80% from 2024 to 2030. This explosive growth is driven by increasing enterprise adoption, expanding use cases, and the competitive advantage gained by early adopters.

Figure 2: Return on Investment (ROI) from LLM implementation varies by industry, with Technology and Financial Services seeing the highest returns

ROI measurements for LLM implementations show impressive results across sectors, with an average 41% return according to Snowflake's research on early AI adopters. Notably, 92% of early adopters report that their AI investments are already paying for themselves, and 98% plan to increase AI investments in 2025.

Key ROI Drivers for LLM Implementations:

- Productivity gains through automation of routine tasks

- Enhanced customer experiences driving higher satisfaction and retention

- Accelerated innovation and product development cycles

- Cost reduction through streamlined operations

- Improved decision-making through better data analysis and insights

Regional adoption varies significantly, with North America leading ($848.65 million in 2023, projected to reach $105.55 billion by 2030), followed by the Asia-Pacific region ($416.56 million in 2023, projected to reach $94.03 billion by 2030). Europe is expected to grow from $270.61 million in 2023 to $50.09 billion by 2030.

"If you think AI will shrink your workforce, think again. You're going to welcome a host of new members to the team this year: digital workers known as AI agents. They could easily double your knowledge workforce and those in roles like sales and field support, transforming your speed to market, customer interactions, product design and so on."

Major Use Cases for LLMs in Business

LLMs are versatile general-purpose systems, but a few key use cases have emerged as especially valuable for businesses. According to McKinsey, about 75% of the current value from generative AI comes from four areas: customer engagement, content synthesis ("virtual experts"), content generation, and software coding.

Customer Support and Service

AI-powered chatbots and assistants handle a large volume of customer inquiries through natural conversations. Modern LLM-driven bots understand free-form questions, maintain context, and deliver personalized answers. Companies report significant efficiency gains and cost savings, with one SaaS startup seeing customer satisfaction scores jump from 35% to 75% while support staffing costs dropped by 50%.

Content Creation and Copywriting

LLMs generate human-like text for blog posts, marketing copy, and product descriptions. Companies report higher content output and faster campaign turnaround, with McKinsey finding that marketing campaigns that once took months can now be executed in days, often with personalization at scale and automated content testing. LLMs can increase marketing productivity by 5-15% of total spend (worth an estimated $463 billion annually).

Data Analysis and Decision Support

LLMs provide natural language interfaces to data, letting non-technical users glean insights through conversational queries. They also excel at reading and summarizing large documents. Morgan Stanley's wealth management division reported that document access efficiency jumped from 20% to 80% after implementing GPT-4 to help advisors quickly find answers in their massive knowledge base.

Software Development and Code Generation

LLM-based tools like GitHub Copilot assist developers at every coding stage. Studies show developers using Copilot can code up to 55% faster on routine tasks with a 39% improvement in code quality. Benefits include autocompletion, debugging assistance, documentation generation, and accelerated learning of new programming languages or APIs.

Marketing and Sales Automation

Beyond content generation, LLMs transform marketing automation and sales enablement. They enable hyper-personalization in marketing, automate customer interactions to qualify leads, analyze customer sentiment from reviews and surveys, and assist sales teams in writing customized proposals and follow-ups.

McKinsey notes that marketing and sales is among the handful of functions that together could capture about 75% of generative AI's total business value. Early adopters report faster campaign deployment and the ability to respond to customer behaviors in real time.

Other emerging use cases include language translation for global operations, education and training, HR and recruiting, and even cybersecurity (analyzing logs or phishing emails). Each new use case comes with domain-specific challenges, but the breadth of applications continues to grow as technology improves and businesses gain confidence in AI solutions.

LLMs in Startups and Tech Innovators

Startups have been at the forefront of LLM adoption, both as early adopters using LLMs to boost their operations, and as builders of new LLM-powered products.

Lean Operations

Small startups, which often lack large staffs, use LLM APIs to punch above their weight. A lean SaaS startup cut support costs in half with an LLM chatbot while improving customer satisfaction. Similar efficiency gains are seen in marketing (automating social media posts, generating press releases), coding (building products faster with fewer developers), and business analysis (getting market insights without a dedicated analyst team).

Essentially, LLMs act as force multipliers, allowing a startup team of 5 to accomplish what might normally require 15 people in various roles. This improves speed and helps startups iterate quickly on their ideas.

Product Innovation

The generative AI boom has given rise to numerous AI-native startups whose core offerings are built on LLMs. These range from AI writing assistants and customer service bots to AI tutors, legal document analyzers, financial advisors, and more.

Such startups typically use a combination of foundation models from providers like OpenAI or Anthropic and custom fine-tuning or prompt engineering to specialize the AI for a particular domain. Many of these companies began appearing in 2023 and have proliferated into 2025, creating a vibrant ecosystem of specialized LLM applications.

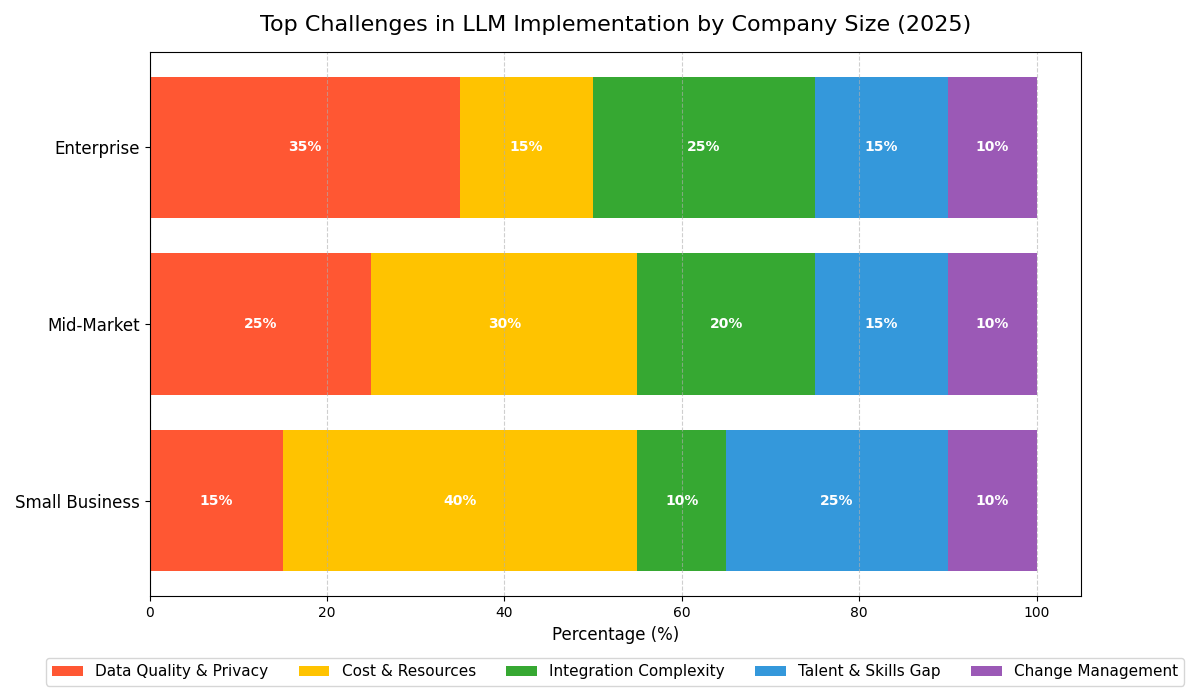

Figure 3: Implementation challenges vary by company size, with small businesses particularly concerned about costs and resources

Infrastructure Choices and Funding

Startups tend to favor cloud-based APIs for LLM access due to ease of use – it requires no machine learning infrastructure on their part. With just a few lines of code, they can integrate powerful AI capabilities into their app or workflow. This is ideal in early stages but can incur significant costs as usage scales.

Some growing startups explore open-source LLMs (like Meta's LLaMA family) which they can run more cheaply in-house once they can afford dedicated GPU servers. The trade-off is technical complexity. A common pattern is: prototype with a top-tier model via API, then later transition to a fine-tuned open model for cost or customization.

The excitement around LLMs led to substantial venture funding for AI startups in 2023–2024. To differentiate in competitive markets, startups focus on proprietary data or superior integration. For instance, an AI customer support startup might integrate deeply with popular CRM systems or offer guarantees of data privacy that generic services don't.

Example: Notion and Productivity Startups

Many productivity startups (Notion, Coda, etc.) integrated LLM features to stay competitive – offering "AI assistants" in their apps to draft content or summarize notes. This trend indicates that LLM integration is becoming a baseline feature expected by users. Startups that embraced it quickly gained user interest, whereas those that didn't risked appearing outdated. By 2025, even non-AI startups often include an AI component in their product, whether it's writing help in a notes app or AI analysis in a fintech app.

LLMs in Healthcare

The healthcare industry presents enormous opportunities for LLMs, but adoption has been cautious due to the high stakes of accuracy, privacy, and compliance. Still, we are seeing hospitals, health startups, and pharma companies explore LLM applications in administrative, clinical, and research contexts.

Patient Interaction and Triage

Healthcare providers are beginning to use LLM-powered chatbots to interact with patients for non-critical needs. For instance, scheduling and basic inquiries can be handled by AI assistants. UT Health Houston partnered with OpenAI to develop voice-based GPT models integrated into their scheduling system. Patients can call in 24/7 and converse with the AI to book appointments or provide information, reducing hold times and freeing staff.

Importantly, these systems are not used for actual medical decision-making – they strictly handle information collection and routine dialogues, then hand off to human professionals as needed.

Clinical Support (with Caution)

Direct clinical use of LLMs is still experimental given the risks. However, clinicians are using LLMs as assistive tools. A growing use case is summarizing patient records or doctor's notes. Doctors face heavy documentation burdens, and LLMs can draft these documents or highlight relevant info from lengthy records, which the physician can then review and edit.

Healthcare AI startups like ScribeAssist or Abridge offer services combining speech recognition with LLM summarization to automatically produce clinical notes after a visit. Thorough human verification is mandatory to ensure accuracy and that no critical detail is missed.

Medical Research and Administrative Efficiency

Healthcare institutions leverage LLMs for training and research support. For example, GPT-based tools can help write code for research analyses, generate hypotheses by reviewing literature, or create educational materials. By speeding up coding and literature review, researchers can iterate on experiments faster.

Hospitals are filled with text-heavy administrative tasks – from insurance claims processing to generating discharge instructions. LLMs are beginning to automate these areas. Insurance and billing departments experiment with AI to interpret denial letters and draft responses or to categorize clinical notes for coding and billing. One health system is using AI to structure data from free-text inputs, which helps in quality improvement efforts.

Privacy and Compliance Considerations

Healthcare is highly regulated (e.g., HIPAA in the U.S. mandates strict protection of patient health information). This means any LLM deployment must ensure patient data is secure. Many providers will not use public LLM APIs out-of-the-box for PHI data. Instead, they opt for solutions like Azure OpenAI Service with a HIPAA BAA (Business Associate Agreement) or on-premise models.

UT Health Houston specifically used OpenAI models through Microsoft Azure's HIPAA-compliant offering and signed a BAA to ensure legal compliance. They also implemented safeguards: no patient data submitted to the AI is retained or used to train the model, and privacy is "protected by design".

In summary, healthcare organizations are leveraging LLMs mainly to reduce administrative burdens, enhance patient communication, and support education/research, all while keeping humans in the loop. The sector's unique demands mean progress is measured and focused on augmenting healthcare professionals, not replacing them.

"We're not using AI for clinical decision support… instead it's a tool to assist with tasks… to help physicians improve efficiency."

LLMs in Financial Services

The finance industry – including banking, investment, and insurance – has been quick to explore LLMs, driven by competitive pressures to enhance customer service, improve analytics, and reduce costs. Banks operate with vast amounts of textual data (reports, communications, regulations) and have many customer touchpoints that can be optimized.

Customer Service and Advice

Many banks and insurance firms have introduced AI chatbots on their websites or apps to handle customer inquiries. These LLM-powered assistants can address questions about account balances, reset passwords, explain credit card rewards, or even help fill out loan applications through a conversational interface.

Banking chatbots use LLMs to provide 24/7 support for routine queries with answers drawn from the bank's policy manuals and FAQs. They also assist in guiding customers through complex forms by breaking down the process into simple questions. Beyond support, some are experimenting with personal financial advice chats – giving tips on budgeting or savings based on the user's data.

Internal Knowledge Management

Large financial institutions are using LLMs internally to help employees navigate vast information repositories. A marquee example is Morgan Stanley's "AI @ Morgan Stanley" assistant for wealth management advisors. It uses OpenAI's GPT-4 to let the firm's ~16,000 financial advisors query a massive internal knowledge base of research and data.

Advisors can ask questions like "What are the key points from our latest Tesla stock analysis?" and get an immediate, compliance-approved answer synthesized from internal documents. Over 98% of Morgan Stanley advisor teams actively use this AI assistant, and access to documents improved from 20% to 80%, meaning information that was previously hard to find is now readily accessible.

Document Processing and Risk Management

Finance is document-heavy – loan agreements, insurance claims, regulatory filings, earnings reports, research papers, and more. LLMs are being deployed to parse and summarize documents at scale. Investment analysts use LLMs to summarize SEC filings or earnings call transcripts, pulling out sentiment and key metrics for quick review.

Banks are also using LLMs for compliance – scanning through communications for any red flags or summarizing new regulations across jurisdictions. One leading bank reported that generative AI had put it close to cutting the time to produce an investment brief by more than 90% (from 9 hours to 30 minutes).

In the developing area of risk management and fraud detection, LLMs can analyze patterns in transaction descriptions or customer messages to identify potential fraud or risk signals. For example, an LLM might flag if multiple customers are complaining in a similar way about a potential fraud scheme, alerting the bank to investigate.

Regulatory and Security Considerations

Finance is acutely aware of data confidentiality (sensitive customer financial data, trading strategies, etc.) and regulatory compliance. As such, many financial institutions are cautious about using public LLM services.

We see a trend of banks working with vendors who offer private deployments. For example, Cohere has targeted enterprises by offering options for on-prem or virtual private cloud model hosting, so that a bank can use their large language model behind the bank's firewall. OpenAI, via Azure, also provides isolated instances for big customers.

The emphasis is on keeping proprietary data safe and ensuring AI outputs don't violate regulations (for instance, an AI advisor shouldn't accidentally recommend a product in a way that violates fiduciary rules). Compliance departments are now often involved in vetting AI solutions.

The financial sector sees generative AI as a tool to both cut costs and boost revenue through better customer engagement. McKinsey projected banking stands to gain one of the largest boosts from AI (up to $340B annually), largely via productivity improvements and operational efficiencies.

Technical Implementation Considerations

Adopting LLMs in a business setting is not as simple as flipping a switch. Companies must make a series of technical decisions and preparations to effectively and safely integrate these models into their systems and workflows.

Cloud APIs vs. Self-Hosting

Cloud APIs (Hosted Models): Providers like OpenAI, Anthropic, Google, and Cohere offer access to their LLMs via API endpoints. This approach requires no ML expertise or hardware, is quick to start, gives access to the provider's latest models, and scales easily. However, costs accumulate on a per-request basis, network round trips can add latency, and data sent to the API leaves your environment, raising privacy concerns.

Self-Hosting (On-Prem or Cloud): With open-source LLMs like Meta's Llama 2 or EleutherAI's models, companies can run models on infrastructure they control. This provides full control over data (nothing leaves your servers), potentially lower variable costs for heavy usage, and deep customization options. However, it requires significant ML expertise and ongoing infrastructure management, and it may deliver lower performance than state-of-the-art proprietary models.
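
The cost trade-off between the two options can be made concrete with a rough break-even calculation. The following is a minimal sketch, assuming API spend scales linearly with token volume and self-hosting is a flat monthly bill; the function names and both price figures are hypothetical and chosen only for illustration.

```python
def monthly_api_cost(tokens_per_month: float, price_per_million_tokens: float) -> float:
    """Pay-per-use cost: scales linearly with token volume."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens


def break_even_tokens(price_per_million_tokens: float, fixed_monthly_cost: float) -> float:
    """Monthly token volume above which a flat self-hosting bill is cheaper."""
    return fixed_monthly_cost / price_per_million_tokens * 1_000_000


# Hypothetical figures, not quotes from any provider.
API_PRICE = 10.0          # dollars per million tokens (assumed)
SELF_HOST_FIXED = 8000.0  # dollars per month for GPUs and operations (assumed)

threshold = break_even_tokens(API_PRICE, SELF_HOST_FIXED)
print(f"Break-even volume: {threshold:,.0f} tokens per month")
```

Below the threshold the API is cheaper; above it the fixed infrastructure bill wins. A real comparison would also need to account for engineering time and any quality gap between models, which this sketch ignores.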

Model Customization Approaches

Prompt Engineering: The simplest approach involves crafting effective prompts that guide the model to produce desired outputs. This requires little technical expertise but offers only limited customization.

Retrieval-Augmented Generation (RAG): Combines vector search of your own data with LLM generation. The system retrieves relevant documents from your knowledge base, provides them as context to the LLM, and generates responses grounded in your data. This approach helps reduce hallucination and allows the model to access up-to-date information.

Fine-tuning: Training the model further on your specific data to specialize it for particular tasks. This requires ML expertise and significant data but can yield highly customized models that better reflect your domain, terminology, and requirements.
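
The retrieval-augmented pattern described above can be sketched in a few lines. This is an illustration under simplifying assumptions: word-count vectors with cosine similarity stand in for a real embedding model and vector database, the knowledge base entries are invented, and the final call to an LLM is left out. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k documents most similar to the query."""
    query_vec = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vec, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query: str, context_docs: list[str]) -> str:
    """Assemble a prompt that asks the model to answer from the retrieved context."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Invented example knowledge base.
KNOWLEDGE_BASE = [
    "Refunds are issued within 14 days of a return request.",
    "Premium support is available on weekdays from 9am to 5pm.",
    "Invoices can be downloaded from the billing page.",
]

question = "How long do refunds take?"
prompt = build_prompt(question, retrieve(question, KNOWLEDGE_BASE, top_k=1))
print(prompt)
```

The assembled prompt would then be sent to whichever model the organization uses. Swapping the word-count vectors for learned embeddings changes the `retrieve` step but not the overall flow.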

Data Privacy and Security Considerations

LLMs raise significant privacy and security concerns, especially when handling sensitive business or customer data. Key considerations include:

- Data Transmission Security: Ensuring data sent to/from LLMs is encrypted in transit

- Model Training Data: Understanding whether your data will be used to train the provider's models (most enterprise offerings allow opting out)

- Data Retention Policies: Knowing how long your data is stored by providers and how it's protected

- Compliance Requirements: Meeting industry-specific regulations (HIPAA, GDPR, financial regulations)

- Personally Identifiable Information (PII): Implementing systems to detect and redact PII before sending to models

- Intellectual Property Protection: Ensuring business secrets aren't leaked or used for training
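
As one illustration of the PII item above, a pre-processing step can mask obvious identifiers before text leaves the organization. The sketch below assumes three simple regular-expression patterns (email, US-style Social Security number, US-style phone number); the pattern set and the `redact_pii` helper are hypothetical, and production systems generally need much broader detection than this.

```python
import re

# Illustrative patterns only; real PII detection needs far wider coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact_pii(text: str) -> str:
    """Replace each match of a known PII pattern with a bracketed label."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


message = "Contact Jane at jane.doe@example.com or 555-123-4567. SSN: 123-45-6789."
print(redact_pii(message))
```

Note that the name "Jane" passes through untouched: pattern matching catches structured identifiers but not free-text names, which is one reason such filters are usually combined with other safeguards.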

Infrastructure and Scaling

For companies using cloud APIs, infrastructure needs are minimal, but they still need to consider:

- API rate limits and how to handle high-traffic periods

- Cost management and optimization (e.g., using cheaper models for simpler tasks)

- Fallback mechanisms for when services are unavailable
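
The rate-limit and fallback items above are often handled together: retry the primary provider with increasing delays, then move on to a backup. The following is a minimal sketch of that idea. The `ProviderUnavailable` exception and the two provider functions are hypothetical stand-ins for real API clients, which raise their own error types.

```python
import time
from typing import Callable, Sequence


class ProviderUnavailable(Exception):
    """Raised by a provider call when the service is down or rate limited."""


def call_with_fallback(
    prompt: str,
    providers: Sequence[Callable[[str], str]],
    max_retries: int = 2,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Try each provider in order, retrying with exponential backoff before moving on."""
    for provider in providers:
        for attempt in range(max_retries + 1):
            try:
                return provider(prompt)
            except ProviderUnavailable:
                if attempt < max_retries:
                    # Wait 0.5s, 1s, 2s, ... between retries of the same provider.
                    sleep(base_delay * 2 ** attempt)
    raise ProviderUnavailable("all providers failed")


# Hypothetical providers used only to demonstrate the control flow.
def flaky_primary(prompt: str) -> str:
    raise ProviderUnavailable("rate limit exceeded")


def backup_model(prompt: str) -> str:
    return f"[backup] {prompt}"


answer = call_with_fallback(
    "Summarize Q3 results",
    [flaky_primary, backup_model],
    sleep=lambda seconds: None,  # skip real waiting in this demo
)
print(answer)
```

Passing `sleep` as a parameter keeps the demo instant and makes the backoff schedule easy to test; in production the default `time.sleep` would apply.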

For self-hosted models, infrastructure requirements are substantial:

- High-performance computing with GPUs (often multiple GPUs for larger models)

- Memory and storage capacity (models can be tens or hundreds of gigabytes)

- Load balancing and scaling for multiple concurrent users

- Model quantization to reduce compute requirements (at some cost to quality)
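
To see why memory capacity and quantization appear on the list above, it helps to estimate the space taken by model weights alone. The sketch below uses the simple relation of parameter count times bits per parameter; it is an approximation that ignores activation memory, caches, and runtime overhead, and the `weight_memory_gb` helper is a hypothetical name.

```python
def weight_memory_gb(num_parameters: float, bits_per_parameter: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (10^9 bytes)."""
    bytes_total = num_parameters * bits_per_parameter / 8
    return bytes_total / 1e9


# Parameter counts in the range of common open models (8B and 70B).
for name, params in [("8B", 8e9), ("70B", 70e9)]:
    for bits in (16, 8, 4):
        print(f"{name} at {bits}-bit: ~{weight_memory_gb(params, bits):.0f} GB")
```

Under these assumptions a 70-billion-parameter model needs roughly 140 GB at 16-bit precision and roughly 35 GB at 4-bit, which is the difference between a multi-GPU server and a much smaller footprint.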

Implementation Challenges by Company Size

As shown in Figure 3, organizations face different challenges based on their size. Enterprise companies are more concerned with data quality and privacy (35%) and integration complexity (25%), while small businesses struggle primarily with cost and resources (40%) and talent gaps (25%). Mid-market companies face a more balanced set of challenges, with cost and resources (30%) being their primary concern.

These differences highlight the need for tailored implementation strategies based on organizational size and capabilities. Enterprises may need to focus on governance frameworks and integration, while smaller companies might benefit from managed solutions and skills development programs.

Strategic Impact Assessment

The strategic impact of LLMs goes far beyond technical implementation. Businesses incorporating these technologies effectively are experiencing transformation across multiple dimensions.

Productivity Acceleration

LLMs are dramatically increasing knowledge worker productivity. Content creators report 5-15% productivity boosts, developers see 55% faster coding speeds, and financial analysts can produce reports in 90% less time. These efficiency gains compound across organizations, enabling companies to do more with existing resources or reallocate talent to higher-value activities.

Customer Experience Enhancement

Customer-facing LLM applications are reshaping interactions and expectations. With 24/7 availability, personalized responses, and faster resolution times, AI-powered customer experiences are setting new standards. Companies report CSAT improvements of 15-40% after implementing LLM-powered support, and higher customer retention rates. The enhanced experience drives loyalty and increases lifetime customer value.

Innovation Acceleration

Product development cycles are being compressed by AI assistance. LLMs help in concept exploration, market research, content creation, and code generation. Early adopters report 30-50% reductions in time-to-market for new products and features. This speed advantage is particularly critical in competitive markets where being first can establish lasting advantages.

Organizational Transformation

The most profound impact of LLMs may be how they transform organizational structures and workflows. Key aspects include:

- Workforce Composition: As AI handles routine tasks, workforce needs shift toward higher-level thinking, creativity, and AI oversight. PwC notes in its 2025 predictions that "AI agents could easily double your knowledge workforce."

- Skill Requirements: Organizations are investing in upskilling employees to work effectively with AI. New roles like "AI Prompt Engineer" or "AI Ethics Officer" are emerging.

- Democratized Capabilities: Previously specialized skills (coding, data analysis, content creation) are becoming accessible to more employees through AI assistance, flattening organizational hierarchies.

- Decision-Making Speed: With faster access to insights and analysis, decision cycles are accelerating across organizations.

Competitive Landscape Disruption

LLMs are creating new competitive dynamics across industries:

- First-Mover Advantage: Early adopters are establishing competitive moats through proprietary data, customized models, and integrated workflows that will be difficult for laggards to overcome.

- Industry Convergence: With similar AI capabilities available across sectors, traditional industry boundaries are blurring. For example, tech companies are entering healthcare with AI-powered diagnostic tools.

- AI-Native Disruptors: New entrants built from the ground up around AI capabilities are challenging incumbents with more efficient operations and superior customer experiences.

- Value Chain Reconfiguration: As AI automates traditional value-adding activities, companies are rethinking where they focus their human resources and competitive differentiation.

"If we would make one prediction to sum up all the rest, it would be this: Your company's AI success will be as much about vision as adoption. That means that your AI choices may be the most crucial decisions not just this year but of your career."

Major LLM Provider Comparison

When selecting LLM providers, businesses need to evaluate performance, capabilities, deployment options, and cost structures. The landscape has several major players with different strengths and approaches.

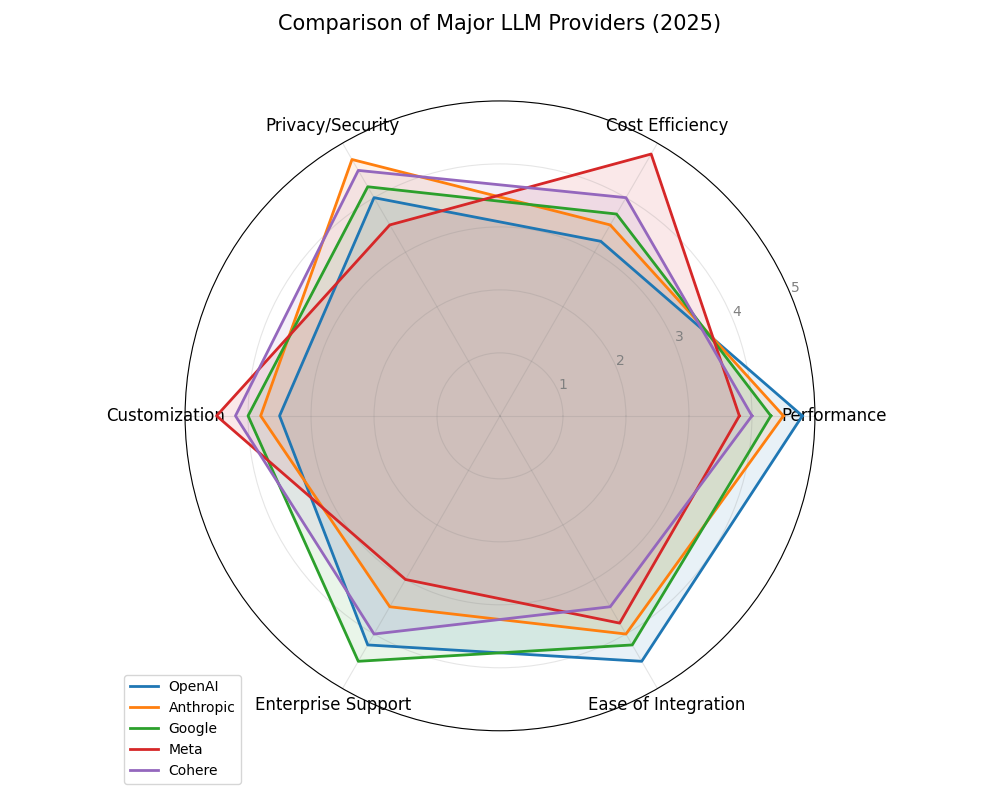

Figure 4: Comparison of major LLM providers across key dimensions relevant to enterprise deployment

Provider Analysis

| Provider | Key Models | Strengths | Limitations | Best For |
|---|---|---|---|---|
| OpenAI | GPT-4o, GPT-4 Turbo, GPT-3.5 | Industry-leading performance; Extensive documentation; Strong reasoning capabilities; Multimodal options | Higher cost; Limited customization; Potential data privacy concerns | Complex reasoning tasks; Creative generation; Customer-facing applications |
| Anthropic | Claude 3.5 Sonnet, Claude 3 Opus, Claude 3 Haiku | Extremely large context window (200K tokens); Strong safety focus; Transparent design philosophy | Newer to market with fewer integrations; Premium pricing for top models | Long document processing; Safety-critical applications; Nuanced conversations |
| Google | Gemini 1.5 Pro, Gemini 1.5 Flash | Strong multilingual support; Deep integration with Google Cloud; 1M token context window | Complex pricing structure; Less optimization for specific business uses | Global/multilingual applications; Google Workspace integration; Research use cases |
| Meta (Open) | Llama 3 (8B, 70B), Llama 3.1 (405B) | Fully controllable/deployable; No usage fees; Growing ecosystem; Commercial use allowed | Requires technical expertise to deploy; Performance gap vs. top proprietary models | Privacy-sensitive deployments; High-volume, cost-sensitive applications; Internal tooling |
| Cohere | Command R, Embed | Enterprise focus; Strong RAG optimization; On-prem deployment options | Less general awareness; Smaller developer community | Enterprise document retrieval; B2B applications; Command-line interfaces |

Selection Considerations

When choosing an LLM provider, consider:

- Task Complexity: More complex reasoning or creative tasks may require top-tier models like GPT-4o or Claude 3 Opus, while simpler tasks can use more cost-effective options.

- Data Privacy Requirements: Organizations with strict data sovereignty or privacy needs may prefer open-source models they can host themselves.

- Integration Needs: Consider how the model will integrate with existing systems and whether vendor-specific tools will accelerate implementation.

- Budget and Usage Volume: High-volume applications may favor open-source models for cost efficiency, while critical but lower-volume needs might justify premium APIs.

- Customization Depth: Consider whether you need to fine-tune models on proprietary data or if prompt engineering is sufficient.

Multi-Model Strategy

Many sophisticated organizations are adopting a multi-model approach, selecting different LLM providers for different purposes to optimize cost and capability. For example, a company might use OpenAI's GPT-4o for the most complex reasoning tasks but rely on a cheaper/faster model (like Anthropic's Claude 3 Haiku or an open-source model) for high-volume but simpler tasks.

This approach is facilitated by the growing ecosystem of orchestration tools that make it easier to switch between providers or select the optimal model for each query type. By implementing a flexible architecture, companies can remain agile as the provider landscape continues to evolve rapidly.
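
A multi-model setup of this kind usually comes down to a routing step that picks a model tier per query. The following is a minimal sketch, assuming a crude keyword-and-length heuristic; the tier names, prices, hint list, and `choose_model` function are all hypothetical, and real orchestration tools typically use richer signals such as classifiers or past quality scores.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str
    cost_per_million_tokens: float  # hypothetical price, for illustration


PREMIUM = ModelTier("premium-reasoning-model", 15.0)
ECONOMY = ModelTier("fast-economy-model", 0.5)

# Phrases that, in this sketch, signal a query needing deeper reasoning.
COMPLEX_HINTS = ("analyze", "compare", "explain why", "draft a strategy")


def choose_model(query: str, long_query_words: int = 150) -> ModelTier:
    """Route long or reasoning-heavy queries to the premium tier, the rest to economy."""
    text = query.lower()
    if len(text.split()) > long_query_words:
        return PREMIUM
    if any(hint in text for hint in COMPLEX_HINTS):
        return PREMIUM
    return ECONOMY


for q in [
    "What are your opening hours?",
    "Compare these two vendor contracts and explain why one is riskier.",
]:
    print(f"{choose_model(q).name}: {q}")
```

Keeping the routing rule in one function means the tiers behind it can be swapped for different providers without touching the calling code.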

Future Outlook and Recommendations

As we look toward the horizon beyond 2025, several clear trends are emerging in the LLM landscape that will shape how businesses integrate and leverage these technologies.

Emerging Trends

- Multimodal Fusion: LLMs will increasingly work across text, images, audio, and video, enabling more natural and comprehensive interfaces. This will open new possibilities for applications like virtual assistants, content creation, and data analysis.

- Autonomous Agents: LLMs will evolve from reactive tools to proactive agents that can work autonomously on complex tasks, chaining multiple steps together without continuous human guidance.

- Domain-Specific LLMs: We'll see more specialist models fine-tuned for specific industries or functions (medicine, law, finance, etc.) that outperform general-purpose models in their domains.

- Efficiency Improvements: Models will become more efficient, running on less powerful hardware with lower latency, enabling edge deployment and reducing costs.

- Enhanced Reasoning: LLMs will develop stronger reasoning capabilities, improving their reliability for complex decision support and reducing hallucinations.

Competitive Implications

As LLM technology matures, the competitive landscape will continue to evolve:

- Widening Performance Gap: Organizations that have built strong AI capabilities will accelerate ahead of laggards, as their data advantages and implementation expertise compound.

- Value Chain Disruption: Traditional intermediaries in knowledge-intensive industries (consultants, brokers, etc.) will face pressure as LLMs enable direct access to expertise and insights.

- New Business Models: AI-first business models will emerge that weren't previously viable, challenging incumbents with fundamentally different approaches.

- Consolidation: In the LLM provider space, consolidation is likely as smaller players struggle to match the capital investment of leaders, though specialized niche providers will survive by targeting specific domains.

Strategic Recommendations

Based on current trends and future projections, organizations should consider the following strategic actions:

- Develop an AI Transformation Roadmap: Create a comprehensive, phased plan that moves beyond isolated use cases to integrate AI across the organization. Include near-term applications that can deliver quick wins alongside more ambitious, longer-term transformations.

- Invest in AI Literacy and Skills: Develop organization-wide AI literacy programs and specialized training for key roles. Consider creating centers of excellence to diffuse AI knowledge and best practices throughout the enterprise.

- Build a Robust Data Foundation: Invest in data quality, accessibility, and governance to maximize the value of LLM implementations. For many organizations, this will be the limiting factor in AI success.

- Adopt a Flexible AI Architecture: Design systems that can easily accommodate new models, providers, and capabilities as the technology evolves. Avoid locking into a single vendor or approach too deeply.

- Prioritize Responsible AI: Develop comprehensive governance frameworks to address ethics, bias, transparency, and compliance. This is not only a risk mitigation strategy but increasingly a competitive differentiator.

- Experiment with Human-AI Collaboration Models: Test different ways of combining human and AI capabilities to maximize the strengths of each. This will require rethinking workflows, incentives, and organizational structures.

"The most successful organizations will be those that find the right balance between human creativity and AI capabilities. This isn't about replacing humans with AI, but rather augmenting human intelligence and freeing people to focus on what they do best – innovation, relationship building, and strategic thinking."

Conclusion

Large Language Models have transitioned from experimental technology to essential business infrastructure in a remarkably short time. As we've seen throughout this report, organizations across sectors are realizing significant benefits from LLM integration, from productivity gains and enhanced customer experiences to accelerated innovation and new business capabilities.

However, successful implementation requires thoughtful consideration of technical options, organizational readiness, and strategic alignment. The gap between leaders and laggards in AI adoption is widening, making it increasingly urgent for organizations to develop coherent AI strategies that balance immediate returns with long-term transformation.

As the technology continues to advance at an unprecedented pace, organizations must stay informed, agile, and focused on the business outcomes they seek to achieve. Those that can effectively harness the power of LLMs while navigating the associated challenges will be well-positioned to thrive in an increasingly AI-driven business landscape.